Illustration

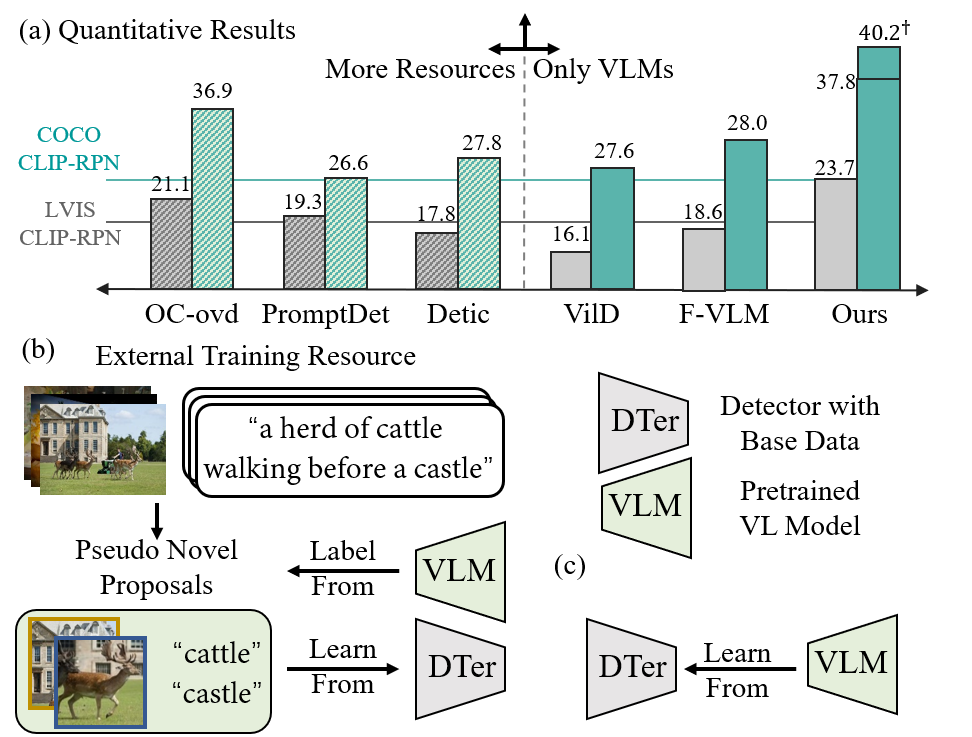

Different approaches to building an open-vocabulary detector: (a) generating pseudo “novel” proposals from extra training resources and VLMs, (b) generalizing from VLMs, and (c) their performance comparison. †: with self-training.

Abstract

Vision-language models such as CLIP have boosted the performance of open-vocabulary object detection, where the detector is trained on base categories but is required to detect novel categories. Existing methods leverage CLIP's strong zero-shot recognition ability to align object-level embeddings with textual embeddings of categories. However, we observe that using CLIP for object-level alignment results in overfitting to base categories, i.e., novel categories most similar to base categories perform particularly poorly because they are recognized as the similar base categories. In this paper, we first identify that the loss of critical fine-grained local image semantics hinders existing methods from attaining strong base-to-novel generalization. Then, we propose Early Dense Alignment (EDA) to bridge the gap between generalizable local semantics and object-level prediction. In EDA, we use object-level supervision to learn dense-level rather than object-level alignment, which maintains the local fine-grained semantics. Extensive experiments demonstrate that our method outperforms competing approaches under the same strict setting and without using external training resources, improving novel box AP50 on COCO by 8.4% and rare mask AP on LVIS by 3.9%.

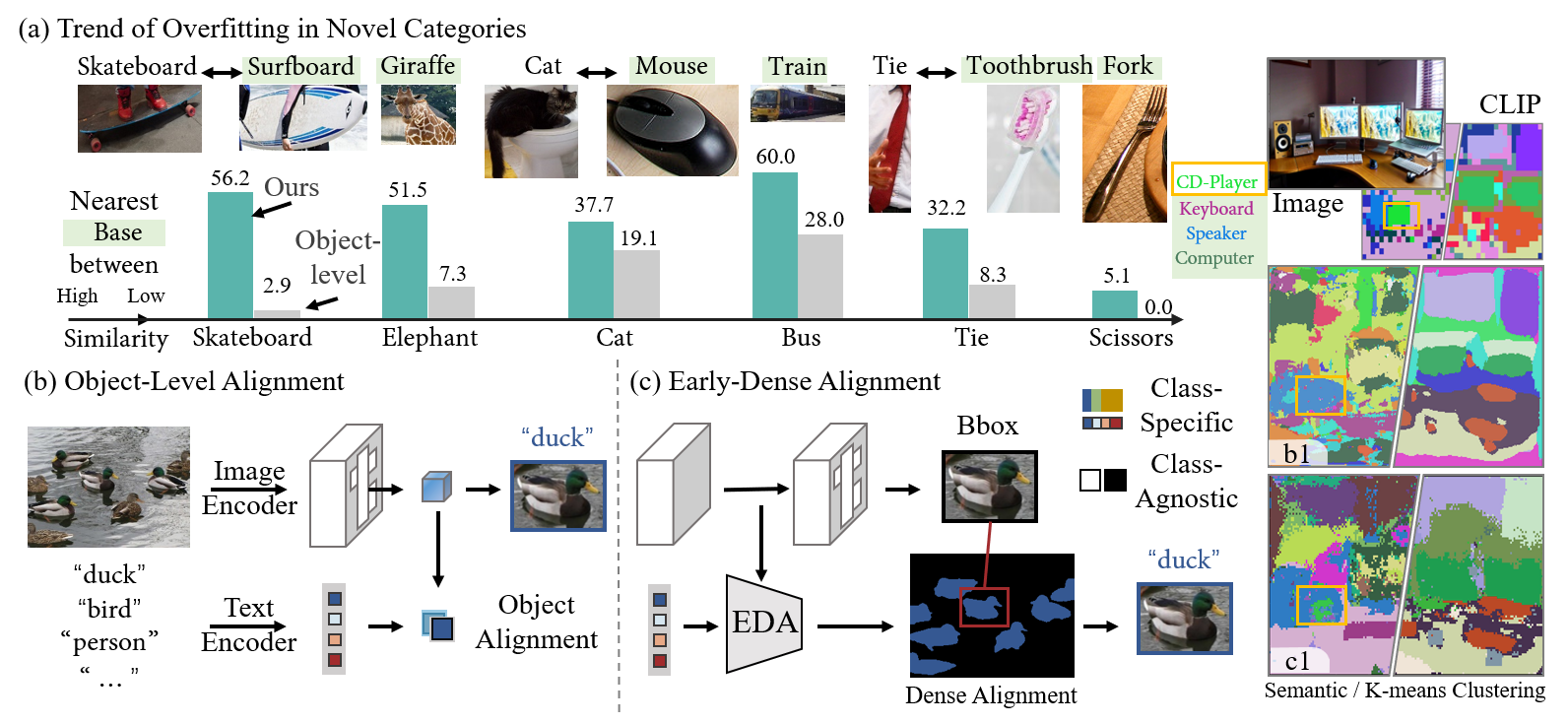

Comparison between object-level alignment and our Early Dense Alignment (EDA) on (a1)-(b1) architectures, (a2)-(b2) local image semantics and clustering results, and (c) box AP of novel categories similar to base categories. We list the six novel categories most similar to base categories, found by calculating the average similarity between the visual features of thousands of randomly sampled novel objects and the text embeddings of the base categories. Our EDA: (1) successfully recognizes the fine-grained novel category CD-player, which object-level alignment predicts as the base category speaker; (2) groups local image semantics into object regions better than CLIP; (3) achieves a much higher novel box AP when predicting novel objects similar to base objects, showing that EDA can distinguish fine-grained details of similar novel and base categories. In contrast, object-level alignment overfits to base categories.
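The similarity ranking described in the caption can be sketched as follows. This is a minimal illustration, not the authors' code: it assumes cosine similarity averaged over every (object, base category) pair, whereas taking each object's maximum over base categories would be another plausible reading. The names `cosine`, `mean_similarity_to_base`, and the 2-D toy embeddings are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def mean_similarity_to_base(object_features, base_text_embeddings):
    """Average cosine similarity over all (object, base category) pairs.

    Assumption: a plain mean over pairs; the source does not specify
    the exact aggregation.
    """
    sims = [
        cosine(feat, text)
        for feat in object_features
        for text in base_text_embeddings
    ]
    return sum(sims) / len(sims)


# Toy 2-D embeddings standing in for real visual and text embeddings.
base_text = [[1.0, 0.0], [0.0, 1.0]]  # hypothetical base categories
novel_objects = {
    "cd_player": [[1.0, 0.0], [0.8, 0.6]],   # close to the base embeddings
    "giraffe": [[-1.0, 0.0], [0.0, -1.0]],   # far from the base embeddings
}

# Rank novel categories from most to least similar to the base categories.
ranking = sorted(
    ((name, mean_similarity_to_base(feats, base_text))
     for name, feats in novel_objects.items()),
    key=lambda item: item[1],
    reverse=True,
)
```

With real data, the top entries of `ranking` would be the novel categories most at risk of being absorbed into a similar base category.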

Method

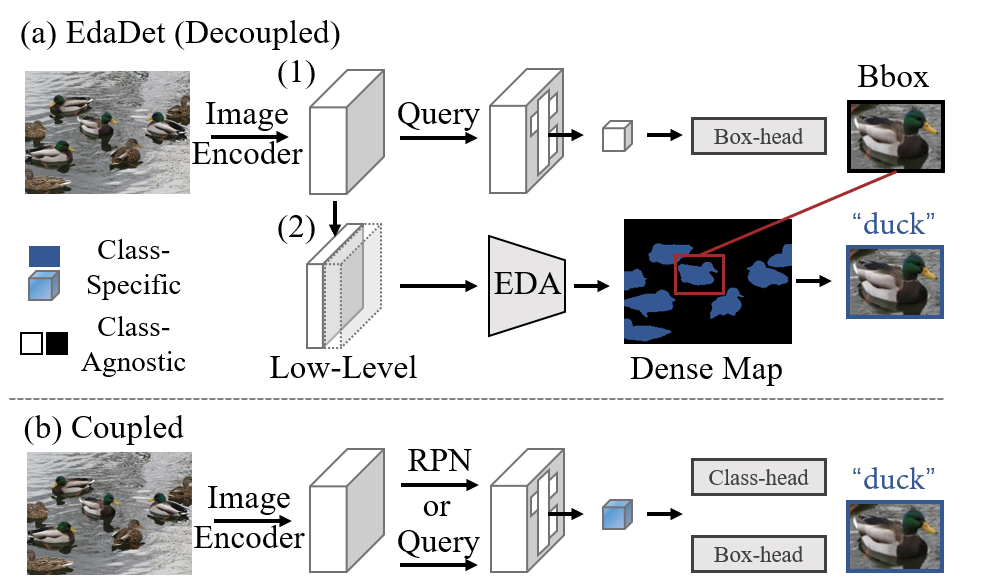

Architecture comparison between (a) our deeply decoupled EdaDet and (b) the existing open-vocabulary detection framework. EdaDet separates the open-vocabulary classification branch from the class-agnostic proposal generation branch at a shallower layer of the decoder. EdaDet first generates object proposals and, independently, predicts dense category probabilities for local image semantics; it then computes each object proposal's category from these dense probabilities.
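The step from dense probabilities to proposal categories can be sketched as below. This is a simplified illustration under stated assumptions, not the EdaDet implementation: per-location probabilities come from a temperature-scaled softmax over cosine similarities to the category text embeddings, and a proposal's category is obtained by mean pooling those probabilities inside its box. The aggregation rule, the temperature value, and all function names are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) *
                  math.sqrt(sum(b * b for b in v)))


def softmax(scores, temperature):
    """Numerically stable softmax with temperature scaling."""
    peak = max(scores)
    exps = [math.exp((s - peak) / temperature) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dense_probabilities(feature_map, text_embeddings, temperature=0.1):
    """Per-location class probabilities for an H x W grid of feature vectors."""
    return [
        [softmax([cosine(pixel, t) for t in text_embeddings], temperature)
         for pixel in row]
        for row in feature_map
    ]


def classify_proposal(dense_probs, box):
    """Mean-pool dense probabilities inside box = (x0, y0, x1, y1), half-open.

    Assumption: simple averaging; the paper's aggregation may differ.
    """
    x0, y0, x1, y1 = box
    cells = [dense_probs[y][x] for y in range(y0, y1) for x in range(x0, x1)]
    num_classes = len(cells[0])
    return [sum(c[k] for c in cells) / len(cells) for k in range(num_classes)]


# Toy 2 x 4 feature map: left half resembles class 0, right half class 1.
class_names = ["speaker", "cd_player"]
text_embeddings = [[1.0, 0.0], [0.0, 1.0]]
feature_map = [
    [[1.0, 0.1], [0.9, 0.0], [0.1, 1.0], [0.0, 0.8]],
    [[0.8, 0.1], [1.0, 0.2], [0.2, 0.9], [0.1, 1.0]],
]

dense = dense_probabilities(feature_map, text_embeddings)
left_probs = classify_proposal(dense, (0, 0, 2, 2))
right_probs = classify_proposal(dense, (2, 0, 4, 2))
left_label = class_names[left_probs.index(max(left_probs))]
right_label = class_names[right_probs.index(max(right_probs))]
```

In this sketch the boxes are given directly; in the architecture described above they would come from the class-agnostic proposal branch, so classification never depends on an object-level embedding.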

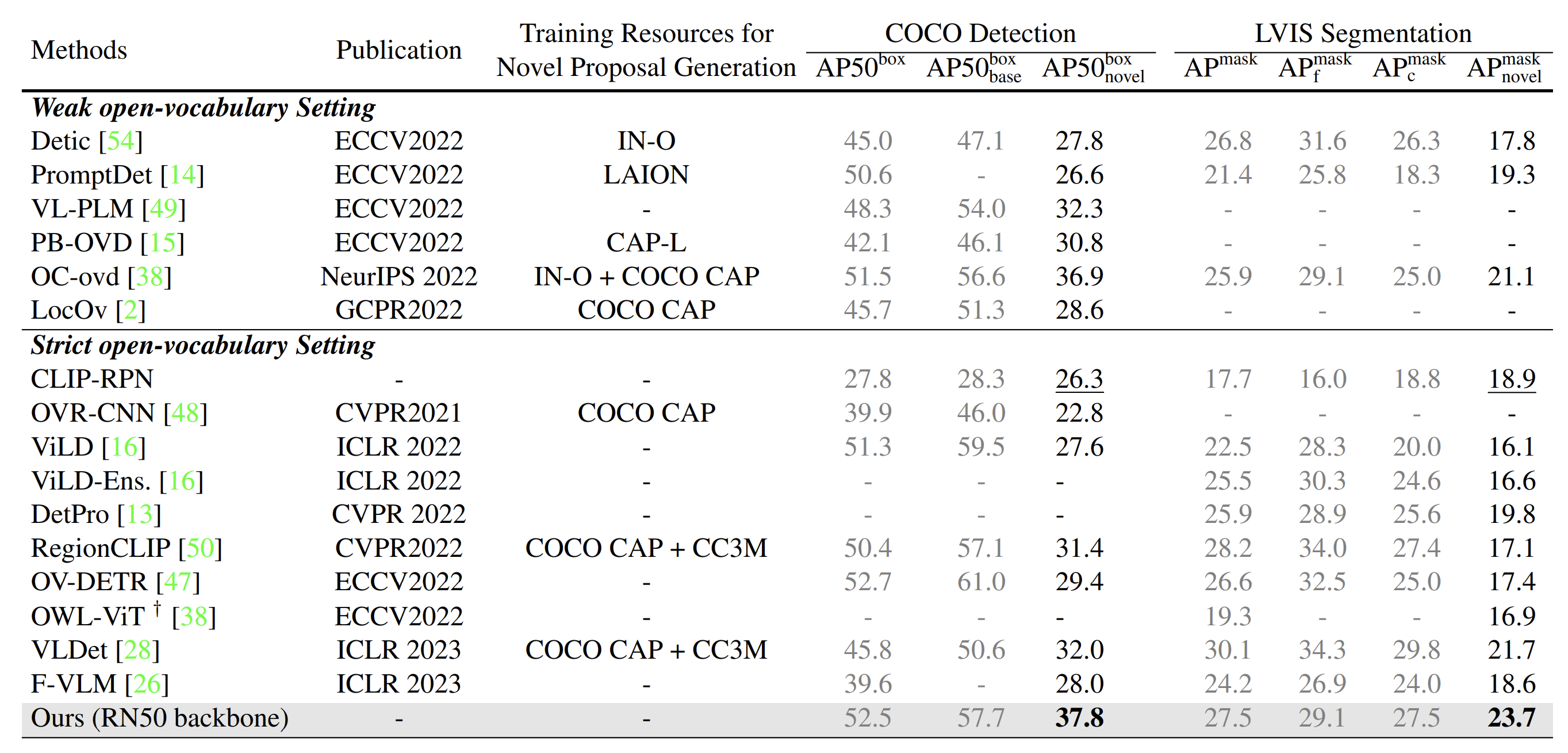

Open-vocabulary object detection results on COCO and LVIS datasets.

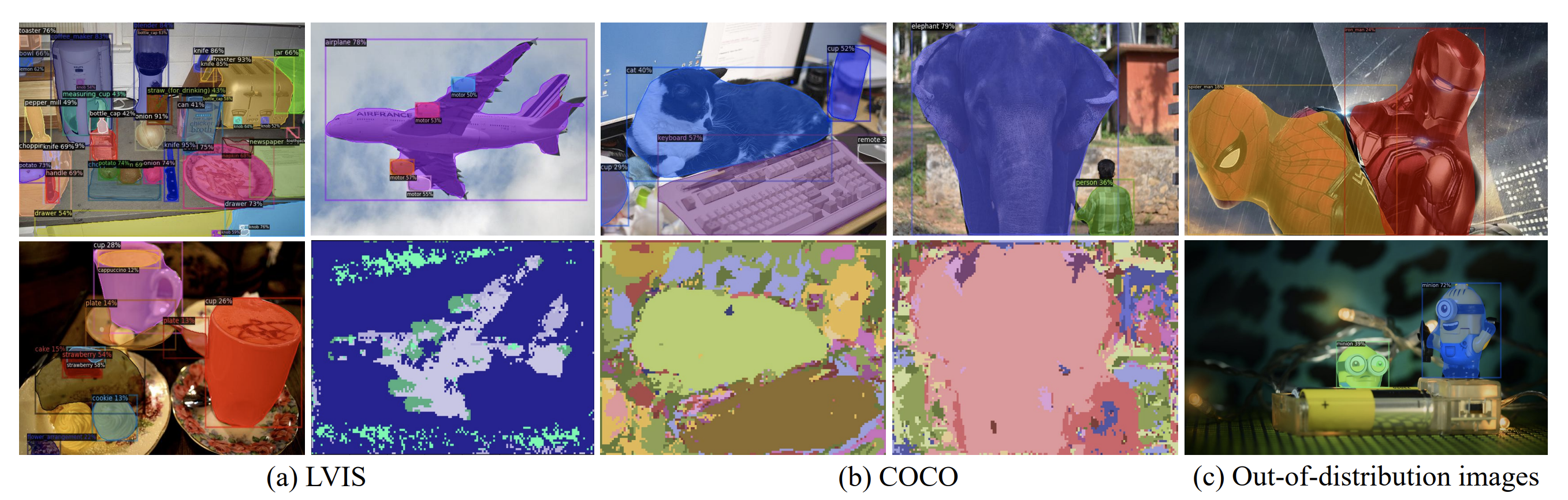

Visualization

We visualize our detection results and semantic maps on (a) LVIS [17], (b) COCO [29], and (c) out-of-distribution images from an open-source website.